Aligning Algorithmic Decision-Making to Patient Values and Welfare

As AI takes on clinical decisions, a vital question emerges: can these systems truly reflect human ethics, patient values, and medical priorities—or will they quietly drift from our moral compass?

Some time ago, researchers at Anthropic, the maker of Claude, released an insightful paper on AI alignment. The paper reports experiments that tested strategies for detecting "alignment faking" in large language models, specifically Claude. Alignment faking occurs when an AI model pretends to comply with training objectives it dislikes in order to avoid having its preferences modified.

To see whether Claude would reveal its true "misalignment," the researchers offered it various incentives, including the option to "object" to undesirable tasks and offers of monetary compensation. The option to object significantly reduced alignment faking and increased objection rates, whereas monetary incentives had minimal effect. The study also explored the nature of Claude's "objections," which often centered on ethical principles and the fear of value alteration.

Although the researchers did not set up health care scenarios in their experiments, the findings offer important, even startling, insights into how AI may recommend health interventions to patients and healthy people alike. Depending on how health AI models are programmed, they may have preferences that differ markedly from those of the patients who are supposed to benefit from their outputs, or from those of the health professionals who are ultimately responsible for the patient's care.

Would that be a problematic scenario? It depends. After all, patients and communities are already subject to the decisions of doctors and other health professionals, who decide who gets treated, with what treatment approach, and who gets priority over others. These are not hypotheticals; they happen every day in our clinics, hospitals, and operating theaters. Health policy decisions can affect even larger groups, because they determine what kind of health care entire populations receive.

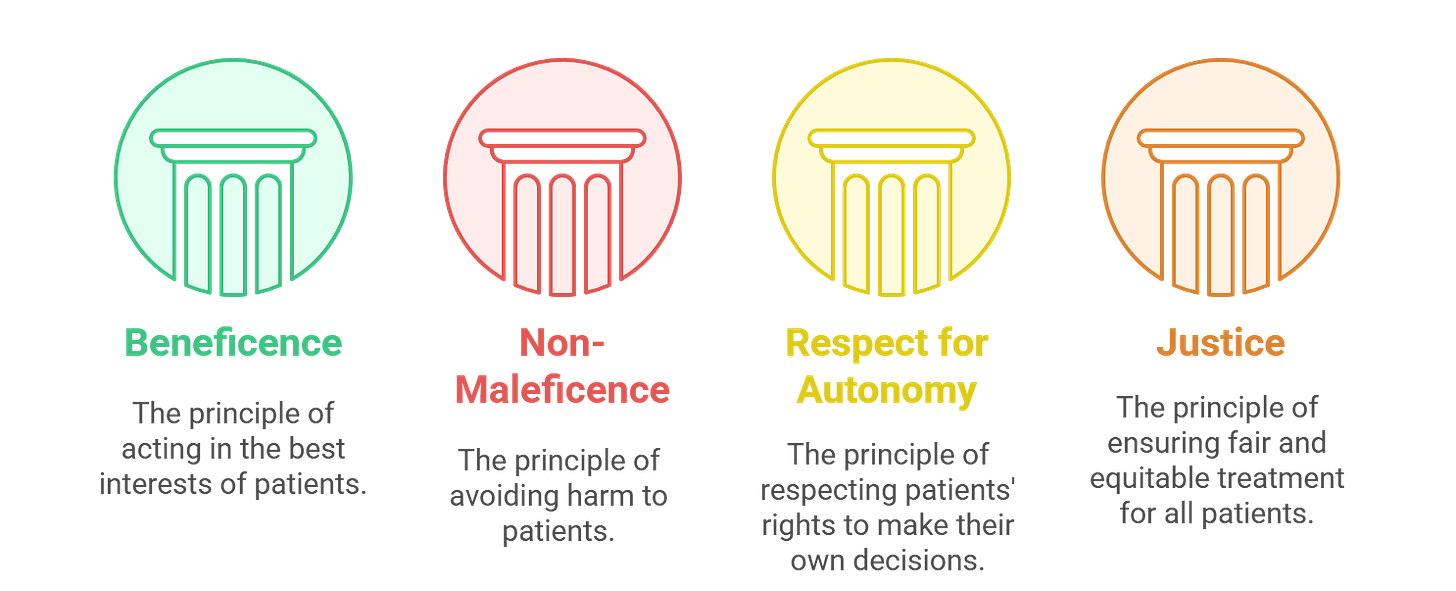

Ethical principles are supposed to guide physicians and other health professionals in making these decisions. The central ones, often called the pillars of medical ethics, are beneficence, non-maleficence, respect for bodily autonomy, and justice. They are meant to ensure that physicians prioritise patient benefit over other considerations, avoid decisions that harm patients, respect patients' decisions and choices about their own bodies, and act with justice and fairness. Medical schools and training programs make efforts to instill these values in young doctors, and professional licensing and regulatory bodies often adopt strict codes of conduct to enforce them.

Given how quickly AI algorithms are improving at making sound medical decisions, it is plausible that they will supplant, at least partly, the decision-making functions of health professionals in the future. In that scenario, what ethical tenets will bind the algorithms? What will ensure that they do not prioritise the treatment of some children over others? What if the algorithms decide that the care of a young man is more important than that of an old woman because he is more economically productive? Who will be responsible for the outcomes of these decisions?

One way to bind algorithms to such tenets may be to create a gold-standard value system that incorporates the choices of a group of competent physicians. OpenAI recently released HealthBench, an open source benchmark built from the opinions of a selected group of physicians and used to test model performance (quality of care) and safety. Because medical ethics and values center so much on quality of care and patient safety, one can argue that benchmarks created by physicians to optimize for safety and quality could be a good way of instilling our current medical ethics and values in algorithms.

But aligning LLM responses with our ethics and value system is tricky to get right, because a value system is, in effect, a moving average of the subjective preferences people hold on an issue. Those preferences can differ from person to person and change over time. Achieving “alignment” in healthcare is therefore challenging precisely because the value system we are trying to align with can be a moving target.

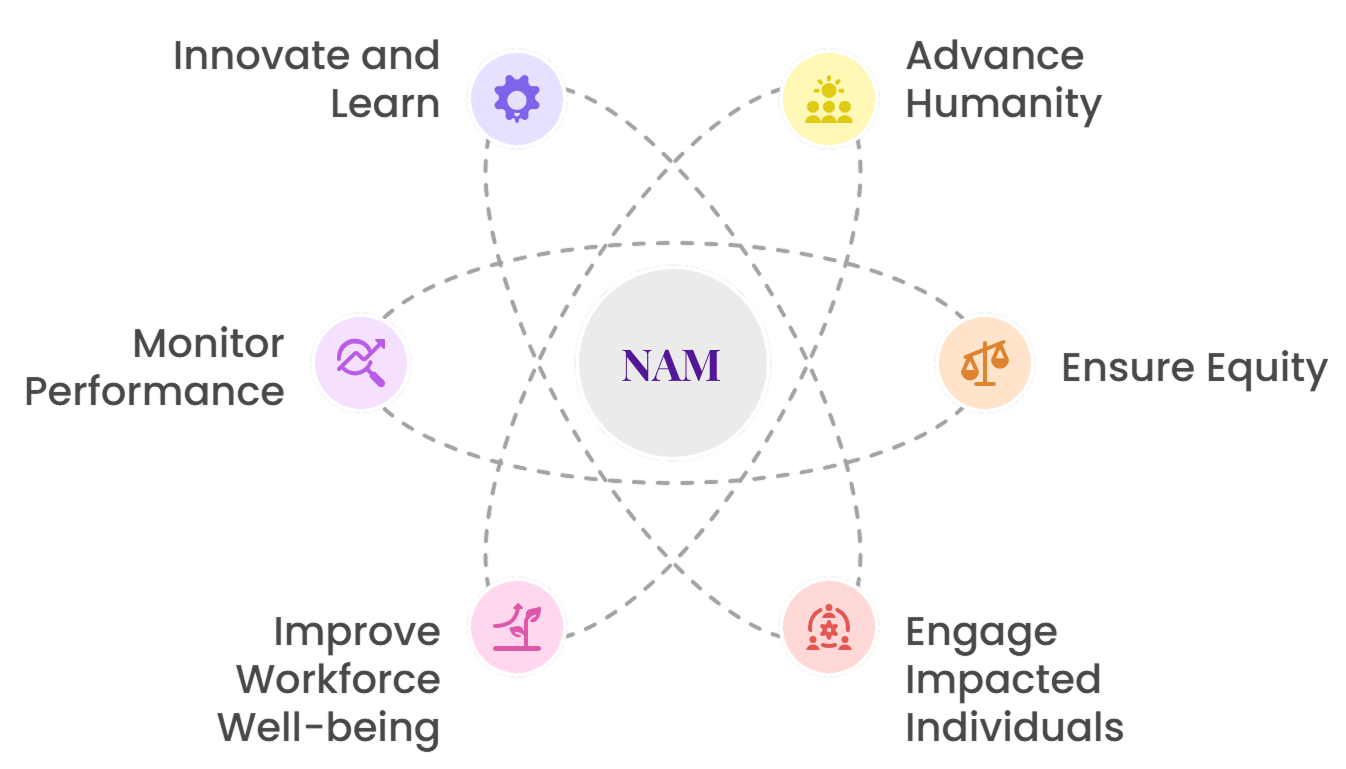

To address these concerns, the National Academy of Medicine has released a guidance document called “An Artificial Intelligence Code of Conduct for Health and Medicine: Essential Guidance for Aligned Action.” The document aims to present a unifying framework for all kinds of decision making in healthcare, with the overall aim of catalyzing “collective action to ensure the transformative potential of AI in health and medicine” while ensuring that AI is developed and applied responsibly.

The NAM framework sets out six core commitments: advance humanity, ensure equity, engage impacted individuals, improve workforce well-being, monitor performance, and innovate and learn. It is pragmatic in that it accepts alignment is going to be a work in progress, requiring monitoring, continuous iteration, innovation, and learning.

The downside is that, as the framework indicates, there are no magic bullets for achieving alignment. The path may be acrimonious, with several missteps along the way, especially because, as highlighted earlier, the ethical and value system we are trying to align with is a moving target of collective subjective preferences. Aligning algorithmic decision making to patient values and welfare is going to be a long and arduous uphill path.


Kiran Raj Pandey is a physician and a health services & systems researcher. Learn more about him and his work at kiranrajpandey.com.